This article explains the concept of reliability in research and why it matters for clinical success. It shows how consistent measurement methods strengthen the validity of findings and why this is directly relevant to clinical research.

The article also examines the main types of reliability (test-retest, inter-rater, parallel-forms, and internal consistency) and illustrates how each helps produce the dependable results that patient safety and effective medical practice require. These insights underscore why reliability is necessary for trustworthy outcomes in clinical settings.

Ultimately, this discussion reinforces the critical nature of collaboration in addressing challenges within the Medtech landscape, paving the way for actionable next steps in enhancing research reliability.

Understanding the definition of reliability in research is essential for ensuring that medical studies produce trustworthy and replicable results. As the backbone of effective clinical investigations, reliability enhances the validity of findings and safeguards patient safety and treatment efficacy. Yet, a significant challenge persists: how can researchers consistently achieve high levels of reliability amidst the complexities of varying methodologies and data integrity? This article explores the essence of reliability in research, examining its definitions, significance, and practical applications in the pursuit of clinical success.

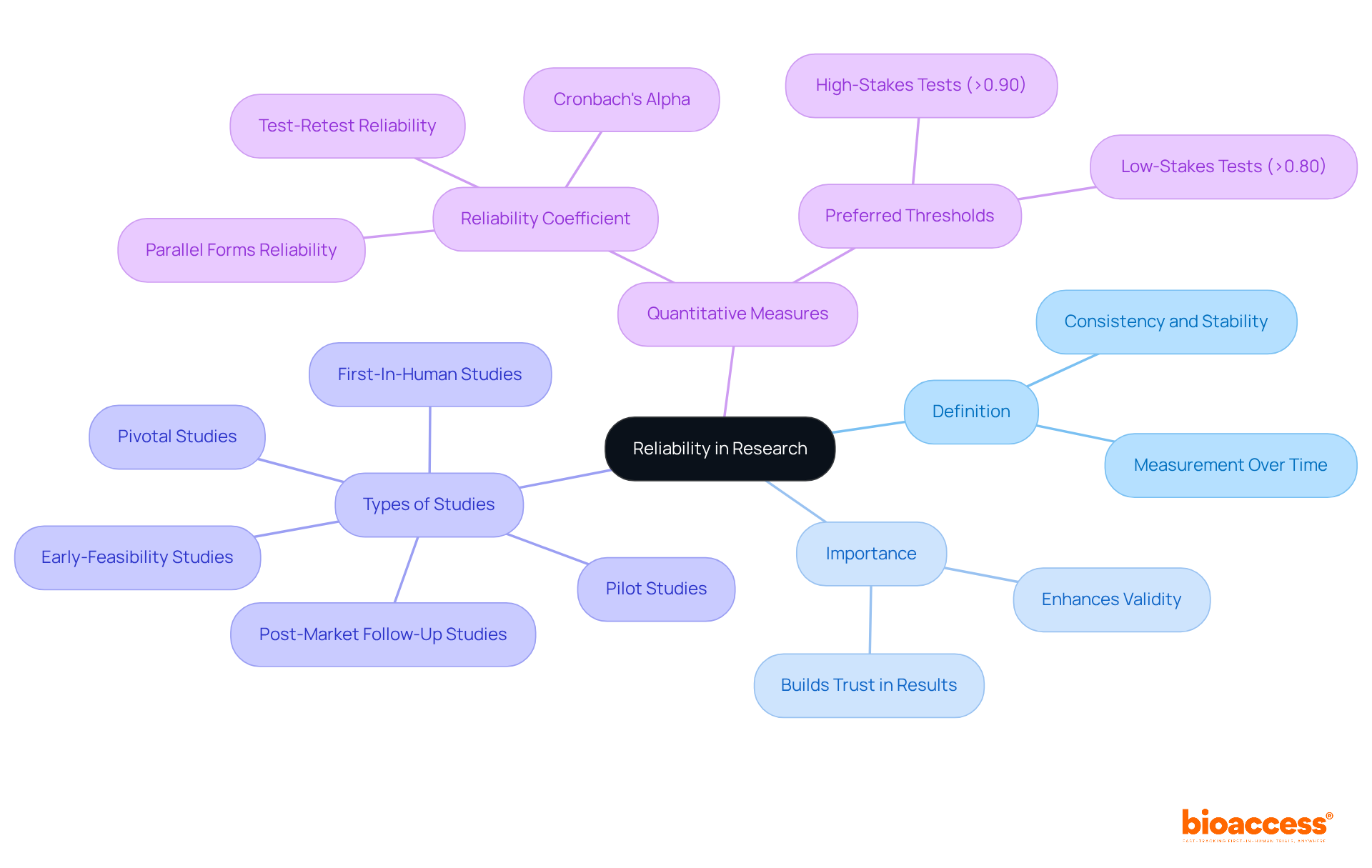

In research, reliability refers to the consistency and stability of measurements over time, across different instruments, and among different observers. It asks whether a method yields the same outcomes when applied repeatedly under controlled conditions. For example, in a trial assessing blood pressure, if repeated measurements of the same patients produce similar readings, the method is considered reliable. This principle is crucial in medical investigations because it supports the replicability of findings and, with it, the overall validity of the analysis. Reliability matters especially in medical studies, since inconsistent outcomes can lead to misleading conclusions and undermine the credibility of the research.

At bioaccess®, we recognize that reliability is paramount for the success of research trials. Our extensive trial management services, including Accelerated Medical Device Study Services in Latin America, cover Early-Feasibility Studies, First-In-Human Studies, Pilot Studies, Pivotal Studies, and Post-Market Follow-Up Studies.

With over 20 years of expertise in Medtech, we ensure that our methodologies yield consistent and reliable results, and our specialized knowledge and adaptability in managing research trials allow us to address the unique challenges of each study. Reliability is often quantified with a reliability coefficient, where values closer to 1 indicate more consistent measurement; a common rule of thumb calls for a coefficient above 0.90 for high-stakes tests and above 0.80 for low-stakes tests. High reliability is a prerequisite for results that are valid and applicable in broader contexts, although on its own it does not guarantee validity. As the academic literature emphasizes, reliability is fundamentally a measure of consistency, which is essential for establishing trust in experimental results and fostering medical success.
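
As a minimal sketch of the rule of thumb above, a reported coefficient can be checked against the cut-off for its stakes level. The function name `meets_reliability_threshold` is hypothetical, the example coefficients are invented, and the 0.90/0.80 cut-offs are taken from the text rather than from any regulatory standard.

```python
def meets_reliability_threshold(coefficient: float, high_stakes: bool) -> bool:
    """Check a reliability coefficient against the rule-of-thumb cut-offs.

    Assumption: "above 0.90" (high-stakes) and "above 0.80" (low-stakes)
    are read as strict inequalities.
    """
    if coefficient > 1.0:
        # Coefficients such as Cronbach's alpha or the ICC cannot exceed 1.
        raise ValueError("reliability coefficients cannot exceed 1")
    threshold = 0.90 if high_stakes else 0.80
    return coefficient > threshold


# Hypothetical coefficients, for illustration only.
for value, stakes in [(0.93, True), (0.85, True), (0.85, False)]:
    label = "high-stakes" if stakes else "low-stakes"
    print(f"{value:.2f} ({label}): {meets_reliability_threshold(value, stakes)}")
```

The same coefficient (0.85 here) can be adequate for a low-stakes questionnaire yet fall short for a high-stakes decision.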

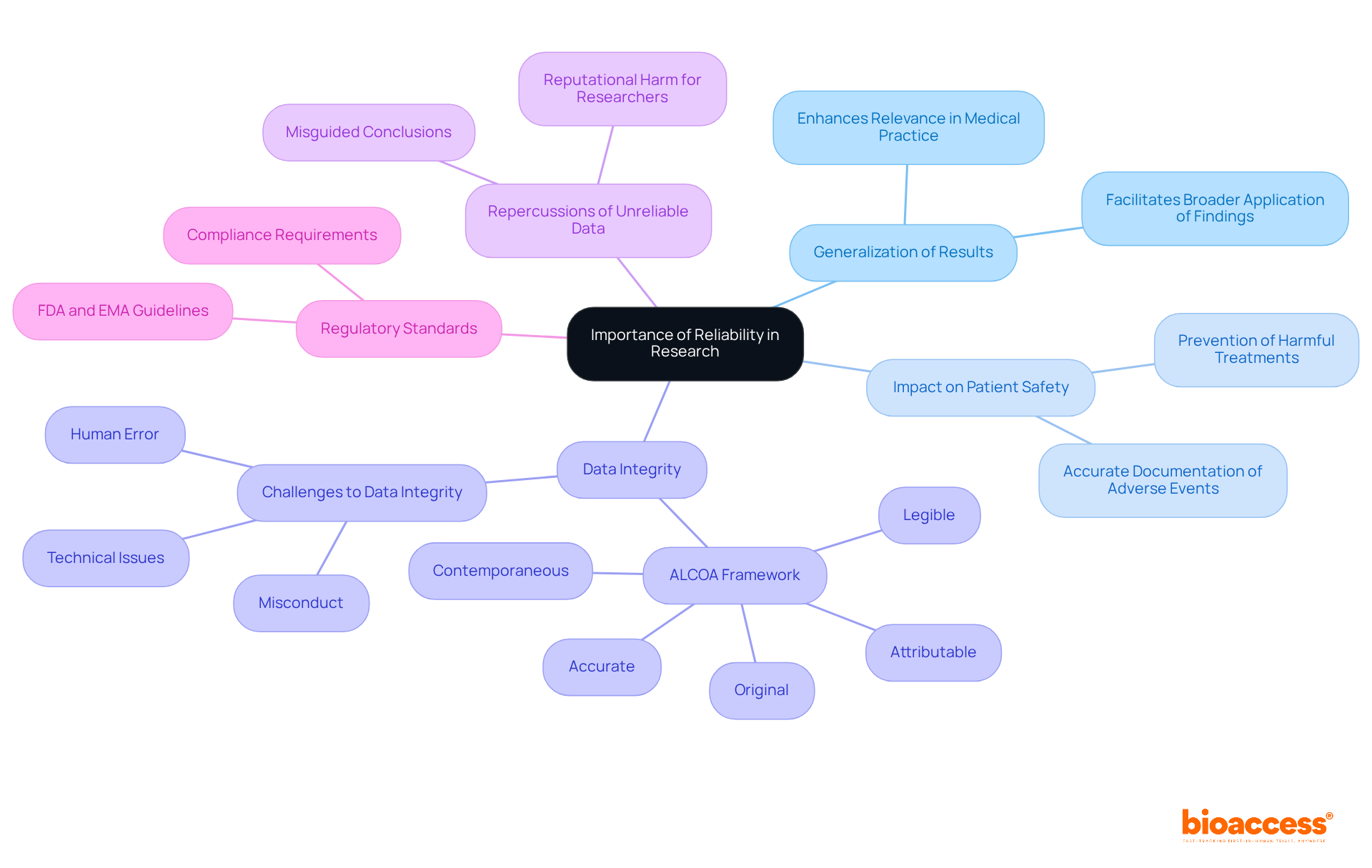

Reliability is central to any study because it underpins the ability to generalize results to broader populations, which is what makes outcomes relevant to medical practice. In medical studies, where patient safety and treatment efficacy are critical, unreliable information can lead to misguided conclusions and potentially harmful decisions. Research has shown that data integrity directly affects patient safety by ensuring that adverse events are documented accurately; failure to maintain this integrity can result in harmful treatments being approved or beneficial ones being overlooked. Manipulated or falsified data can also compromise the scientific validity of clinical studies, which underscores the role of data integrity in safeguarding patients. Establishing reliability is therefore an essential step in the investigative process, ensuring that analyses can be replicated and that their results remain valid in practical applications.

The principles of data integrity, summarized by the acronym ALCOA (Attributable, Legible, Contemporaneous, Original, and Accurate), provide a clear framework for the standards that underpin dependable studies. The consequences of unreliable data are evident across various case studies, in which the misclassification of legitimate studies has led to erroneous conclusions and reputational harm for researchers. Clinical study directors emphasize that maintaining high standards of reliability is not merely a moral obligation but a fundamental requirement for protecting patient well-being and advancing medical knowledge. Regulatory bodies such as the FDA and EMA set data integrity requirements, further highlighting the importance of trustworthiness in clinical studies.

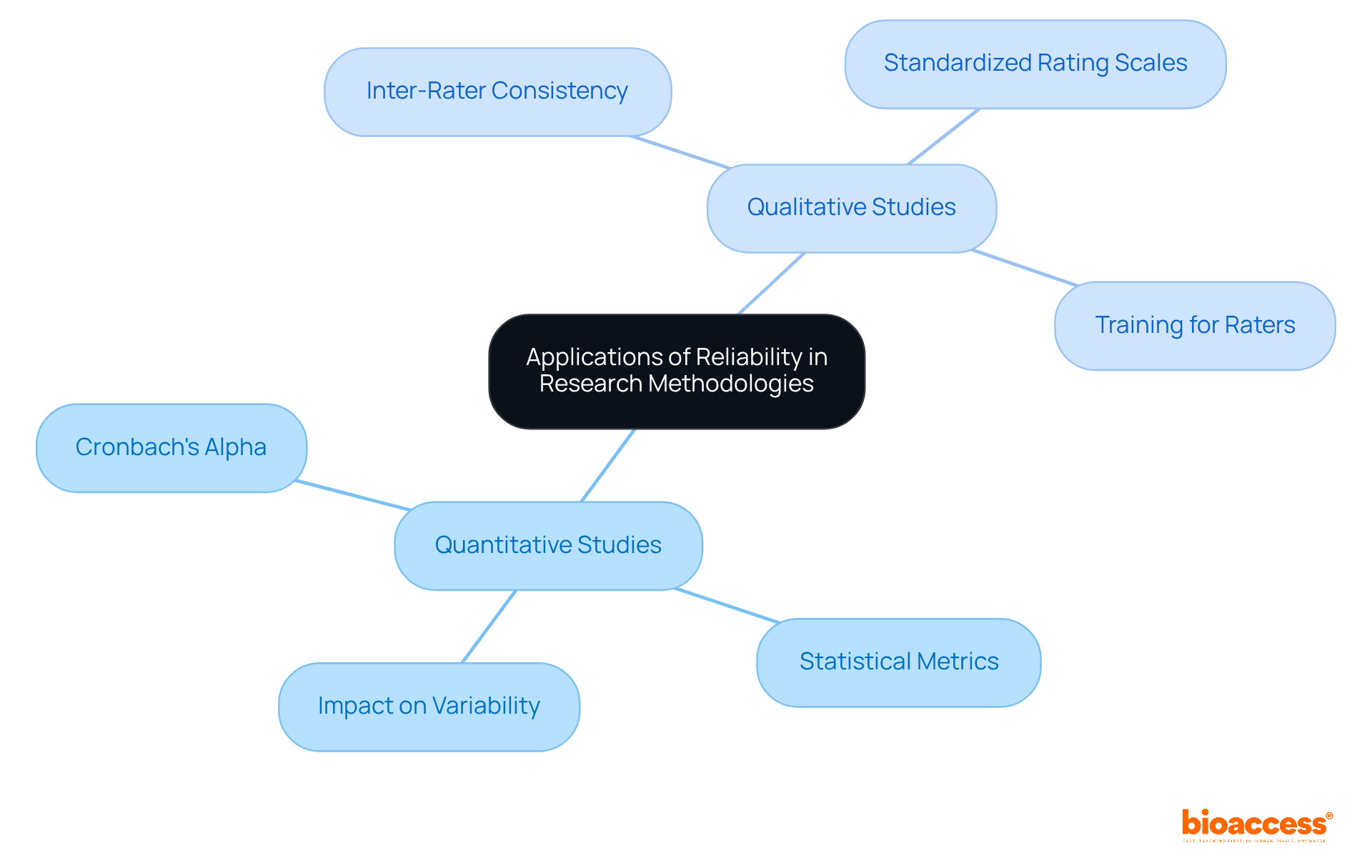

Reliability is a cornerstone of research methodology in both quantitative and qualitative studies. In quantitative studies, it is often evaluated with statistical metrics such as Cronbach's alpha, which assesses the internal consistency of survey items, that is, whether they measure the same underlying construct. In qualitative research, inter-rater reliability becomes essential, particularly when multiple observers evaluate the same phenomenon.

Consider studies of new drugs: having several reviewers analyze patient reactions can substantially improve the consistency of results. Studies report that when clinicians use standardized rating scales, variability in diagnoses can fall dramatically, from 15% to just 3%. This underscores the importance of inter-rater reliability in healthcare settings, where consistent interpretations are vital for maintaining the integrity of trial outcomes.
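
When two reviewers rate the same patients, raw percent agreement overstates consistency because some matches happen by chance. A minimal sketch of Cohen's kappa, a standard chance-corrected agreement statistic for two raters, follows; it is offered as one common option, not necessarily the statistic used in the studies cited above, and the function names and severity labels are hypothetical example data.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Fraction of items on which two raters assigned the same label."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability that both raters pick the same category
    # if each labels independently at their own observed rates.
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (p_observed - p_expected) / (1.0 - p_expected)


# Hypothetical severity ratings of ten patient reactions by two reviewers.
reviewer_1 = ["mild", "mild", "moderate", "severe", "mild",
              "moderate", "mild", "severe", "moderate", "mild"]
reviewer_2 = ["mild", "moderate", "moderate", "severe", "mild",
              "moderate", "mild", "moderate", "moderate", "mild"]
print(percent_agreement(reviewer_1, reviewer_2))  # 0.8
print(round(cohens_kappa(reviewer_1, reviewer_2), 2))  # 0.68
```

Kappa (about 0.68) is lower than raw agreement (0.8) because part of that raw agreement would be expected even if the reviewers labeled at random with their own category frequencies.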

Rigorous training and clear definitions for raters can also raise inter-rater agreement: in one study, post-training agreement reached 90%, as measured by Krippendorff's alpha. Such measures both improve reliability and safeguard the validity of conclusions drawn from medical trials.
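
Krippendorff's alpha extends chance-corrected agreement to any number of raters and tolerates missing ratings. The following is a minimal sketch for nominal (categorical) labels only, assuming ratings are arranged as one list per rated item with `None` where a rater did not score that item; ordinal or interval data would need a different distance function. The function name and the example ratings are hypothetical.

```python
from collections import defaultdict
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal data.

    units: one inner list per rated item, holding each rater's label,
    or None if that rater did not rate the item.
    """
    # Build the coincidence matrix: every ordered pair of ratings within
    # an item, weighted by 1 / (m - 1) where m is the item's rating count.
    coincidence = defaultdict(float)
    for unit in units:
        values = [v for v in unit if v is not None]
        m = len(values)
        if m < 2:
            continue  # an item with fewer than two ratings cannot be paired
        for a, b in permutations(values, 2):
            coincidence[(a, b)] += 1.0 / (m - 1)

    # Marginal totals per category and the total number of pairable values.
    n_c = defaultdict(float)
    for (a, _b), weight in coincidence.items():
        n_c[a] += weight
    n = sum(n_c.values())
    if n <= 1:
        raise ValueError("not enough pairable ratings")

    # Nominal distance: 1 for any disagreement, 0 for agreement.
    observed = sum(w for (a, b), w in coincidence.items() if a != b)
    expected = sum(n_c[a] * n_c[b] for a in n_c for b in n_c if a != b)
    if expected == 0:
        raise ValueError("alpha is undefined when only one category is used")
    return 1.0 - (n - 1) * observed / expected


# Hypothetical ratings: 5 items, 3 raters, some ratings missing.
units = [
    ["yes", "yes", "yes"],
    ["yes", "no", "yes"],
    ["no", "no", None],
    ["no", "no", "no"],
    ["yes", None, None],  # only one rating, so it is ignored
]
print(round(krippendorff_alpha_nominal(units), 3))  # 0.667
```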

In trials overseen by bioaccess®, ensuring reliability is paramount. By employing stringent methodologies and comprehensive clinical trial management services, including feasibility assessments, site selection, and compliance evaluations, bioaccess® enhances the reliability of trial results, ultimately supporting the success of medical device development in Latin America.

Reliability, which is essential to the credibility of findings, comes in several distinct types, each serving a different purpose.

Test-Retest Reliability measures the consistency of results when the same test is administered to the same subjects at different times. It is particularly important for assessments of characteristics expected to remain stable over time. Reported test-retest coefficients vary between instruments, with acceptable values generally ranging from 0.73 to 0.92; in one reported example, the original version of a measure showed a three-week test-retest coefficient of .85, a useful benchmark for researchers.
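
A test-retest coefficient is frequently computed as the Pearson correlation between scores from the two administrations (an intraclass correlation is another common choice). A minimal, dependency-free sketch follows; the function name and the scores for five subjects are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical scores for the same five subjects, tested twice.
time_1 = [10, 12, 15, 18, 20]
time_2 = [11, 12, 14, 19, 21]
print(round(pearson_r(time_1, time_2), 2))  # 0.98
```

Pearson's r captures whether subjects keep their relative standing between sessions but ignores a uniform shift (for example, every score rising by 2 points), which is one reason some researchers prefer an ICC for test-retest work.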

Inter-Rater Reliability assesses the degree of agreement between different observers measuring the same phenomenon. It is vital in studies involving subjective judgments, such as clinical assessments. Agreement is commonly summarized with the Intraclass Correlation Coefficient (ICC): values below 0.40 indicate poor agreement, values between 0.40 and 0.75 good agreement, and values above 0.75 excellent agreement. For instance, an assessment of a surgical resident's performance may yield a 75% consensus rate among evaluators, which underscores the need for uniform grading methods to improve reliability.
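
The ICC comes in several forms, and studies should state which one they report. A minimal sketch of one of them, ICC(2,1) in Shrout and Fleiss's notation (two-way random effects, absolute agreement, single rater), computed from ANOVA mean squares, is shown below together with the poor/good/excellent bands quoted above. The choice of ICC form, the function names, and the ratings are illustrative assumptions.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: list of subjects, each a list of k scores (one per rater).
    """
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least two subjects")
    k = len(ratings[0])
    if k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need at least two raters and a complete n x k table")

    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Partition the total sum of squares into subjects, raters and residual.
    ss_total = sum((x - grand_mean) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when all ratings are identical")
    return (ms_rows - ms_error) / denominator


def classify_icc(icc):
    """Bands quoted in the text: <0.40 poor, 0.40 to 0.75 good, >0.75 excellent."""
    if icc < 0.40:
        return "poor"
    if icc <= 0.75:
        return "good"
    return "excellent"


# Illustrative ratings: 6 subjects (rows) scored by 4 raters (columns).
ratings = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
icc = icc_2_1(ratings)
print(round(icc, 2), classify_icc(icc))  # 0.29 poor
```

Here the raters order subjects fairly similarly but differ systematically in overall level (the second rater scores everyone low). Because ICC(2,1) measures absolute agreement, that rater effect pulls the coefficient into the poor band.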

Parallel-Forms Reliability involves comparing two different forms of the same test to determine if they yield similar results. This type is particularly useful when researchers aim to minimize the effects of practice or fatigue on test performance. High correlation between the two forms indicates strong parallel-forms consistency, essential for ensuring that different versions of a test measure the same construct effectively.

Internal Consistency evaluates the consistency of results across items within a single test and is often assessed with statistical methods such as Cronbach's alpha. A Cronbach's alpha of 0.7 is generally considered acceptable for lower-stakes assessments, while values above 0.9 are preferred for high-stakes evaluations such as national licensure exams. A high alpha suggests that the items in a test consistently reflect the same underlying construct.
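
Cronbach's alpha follows a standard formula, `alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)`, where `k` is the number of items. A minimal sketch using only Python's standard library is shown below; the function name and the example responses (five respondents, four items on a 1 to 5 scale) are hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents x items table of scores."""
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least two items and a complete table")

    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses: 5 respondents x 4 items on a 1-5 scale.
responses = [
    [4, 5, 4, 5],
    [3, 3, 4, 3],
    [5, 5, 5, 4],
    [2, 2, 3, 2],
    [4, 4, 3, 4],
]
alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.2f}")  # alpha = 0.92
```

A value of about 0.92 would clear the 0.9 bar mentioned above for high-stakes evaluations, although with only five respondents the estimate itself is very imprecise.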

Understanding reliability is therefore crucial for researchers in clinical settings, as it guides the selection of appropriate methodologies and supports robust, credible findings.

Understanding the definition of reliability in research is fundamental to ensuring the credibility and effectiveness of clinical studies. Consistency and stability in measurements enhance the validity of findings and safeguard patient safety and treatment efficacy. The reliability of research methodologies is essential for generating trustworthy results that can be replicated and applied in real-world medical scenarios.

Reliability is critical across research methodologies, in both quantitative and qualitative approaches. Different types of reliability, such as test-retest, inter-rater, parallel-forms, and internal consistency, each serve a unique purpose in maintaining the integrity of clinical studies. Data integrity and adherence to established standards help prevent misleading conclusions that could adversely affect patient care.

The implications of reliability extend beyond research, influencing the advancement of medical knowledge and practices. Researchers and clinical practitioners must prioritize reliability in their studies to foster trust in their findings and contribute to the overall improvement of healthcare outcomes. By committing to rigorous methodologies and maintaining high standards of dependability, the medical community can ensure that research not only informs but also enhances the quality of patient care.

What does reliability mean in research?

Reliability in research refers to the consistency and stability of measurements over time, across different instruments, and among various observers. It evaluates whether a methodology can consistently yield the same outcomes under controlled conditions.

Why is reliability important in medical research?

Reliability is crucial in medical research because it enhances the replicability and validity of findings. Inconsistent outcomes can lead to misleading conclusions and undermine the credibility of the research.

How is reliability quantified in research?

Reliability is often quantified using coefficients, with a preferred threshold of above 0.90 for high-stakes tests and above 0.80 for low-stakes tests. High reliability supports, but does not by itself guarantee, results that are valid and applicable in broader contexts.

What types of studies does bioaccess® conduct to ensure reliability?

bioaccess® conducts various types of studies to ensure reliability, including Early-Feasibility Studies, First-In-Human Studies, Pilot Studies, Pivotal Studies, and Post-Market Follow-Up Studies.

How long has bioaccess® been involved in Medtech research?

bioaccess® has over 20 years of expertise in Medtech, ensuring that their methodologies yield consistent and reliable results.

What is the significance of consistency in research results?

Consistency in research results is essential for establishing trust in experimental outcomes and fostering success in medical investigations.